Enhance your coding workflow with Codex CLI, a local terminal tool that brings the reasoning power of OpenAI's latest models to your fingertips, with support for other API models such as GPT-4.1 coming soon.

OpenAI is releasing OpenAI o3 and o4-mini, the latest models in its o-series. These models are trained to think for longer before responding. They are the most intelligent models OpenAI has released to date and represent a step change in ChatGPT's capabilities for everyone from curious users to advanced researchers. For the first time, OpenAI's reasoning models can agentically use and combine every tool within ChatGPT, including web search, analysing uploaded files and other data with Python, reasoning deeply about visual inputs, and even generating images.

These models are trained to reason about when and how to use tools so that they can solve more complex problems, producing detailed, thoughtful answers in the right output formats, typically in under a minute. This makes them better at handling multi-faceted questions and is a step toward a more agentic ChatGPT that can carry out tasks on your behalf. The combination of state-of-the-art reasoning and full tool access translates into significantly stronger performance on both academic benchmarks and real-world tasks, setting a new standard for intelligence and usefulness.

What’s changed

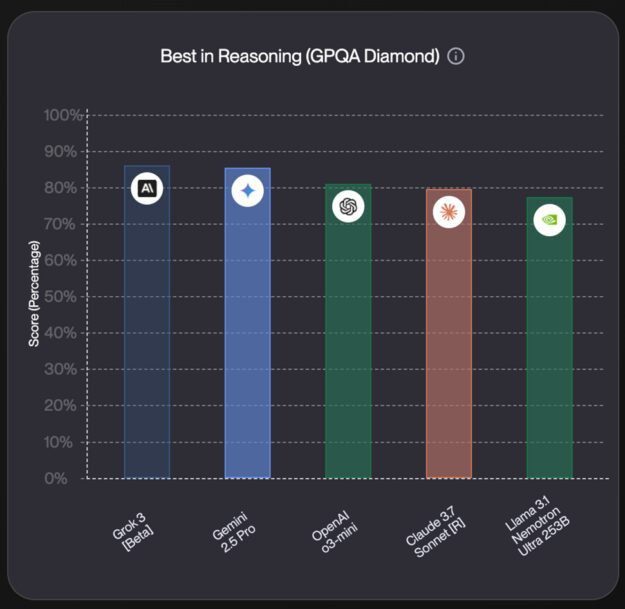

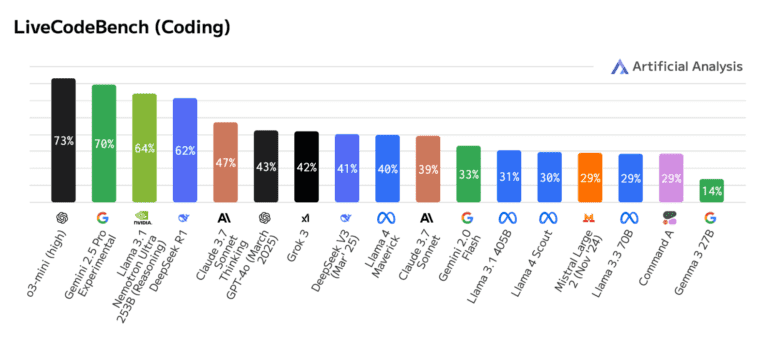

OpenAI o3 is OpenAI's most powerful reasoning model, pushing the frontier across coding, maths, science, visual perception, and more. It sets a new state of the art (SOTA) on benchmarks including Codeforces, SWE-bench, and MMMU. It is ideal for complex queries that require multi-faceted analysis and whose answers may not be immediately obvious. It performs especially well on visual tasks such as analysing images, charts, and graphics.

In evaluations by external experts, o3 makes 20 percent fewer major errors than OpenAI o1 on difficult, real-world tasks, excelling particularly in programming, business/consulting, and creative ideation. Early testers highlighted its analytical rigour as a thought partner and its ability to generate and critically evaluate novel hypotheses, particularly in biology, mathematics, and engineering.

OpenAI o4-mini is a smaller model optimised for fast, cost-efficient reasoning. It achieves remarkable performance for its size and cost, particularly on maths, coding, and visual tasks, and it is the best-performing benchmarked model on AIME 2024 and 2025. In expert evaluations, it also outperforms its predecessor, OpenAI o3-mini, on non-STEM tasks and in domains such as data science. Thanks to its efficiency, o4-mini supports significantly higher usage limits than o3, making it a strong high-volume, high-throughput option for questions that benefit from reasoning.

Thanks to their improved intelligence and the inclusion of web sources, external expert evaluators rated both models as following instructions better and giving more useful, verifiable responses than their predecessors. The two models should also feel more natural and conversational than earlier reasoning models, especially because they can draw on memory and past conversations to make responses more personalised and relevant.

Continuing to scale reinforcement learning

Throughout the development of OpenAI o3, OpenAI observed that large-scale reinforcement learning exhibits the same “more compute = better performance” trend seen in GPT-series pretraining. By retracing that scaling path, this time in RL, it pushed both training compute and inference-time reasoning up by an additional order of magnitude and still saw clear performance gains, confirming that the models perform better the more they are allowed to think. At the same latency and cost as OpenAI o1, o3 delivers higher performance in ChatGPT, and OpenAI has verified that its performance keeps improving when it is allowed to think longer.

OpenAI also trained both models to use tools through reinforcement learning, teaching them not only how to use tools but also to reason about when to use them. Because they can deploy tools based on the desired outcome, they are more capable in open-ended situations, particularly those involving visual reasoning and multi-step workflows. Early testers report that this improvement shows up both on academic benchmarks and in real-world tasks.

Thinking with images

For the first time, these models can integrate images directly into their chain of thought. They don't just see an image; they think with it. This unlocks a new kind of problem-solving that blends visual and textual reasoning, reflected in their state-of-the-art performance across multimodal benchmarks.

The models can interpret images even when they are blurry, inverted, or low quality, such as whiteboard photos, textbook diagrams, or hand-drawn sketches that users upload. As part of their reasoning, they can use tools to transform images on the fly, for example by rotating, zooming, or otherwise manipulating them.
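OpenAI does not describe how these image tools are implemented. As a purely illustrative sketch (the function names and the nested-list grayscale image format are hypothetical), this is what "rotate" and "zoom" amount to as operations on pixel data:

```python
from typing import List

Image = List[List[int]]  # hypothetical format: grayscale pixel values, row-major


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate 90 degrees clockwise, e.g. to straighten a sideways photo."""
    # Reverse the row order, then transpose: row i of the result is column i read bottom-up.
    return [list(row) for row in zip(*img[::-1])]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Cut out a rectangular region of interest."""
    return [row[left:left + width] for row in img[top:top + height]]


def zoom(img: Image, factor: int) -> Image:
    """Nearest-neighbour upscaling: repeat each pixel `factor` times in both directions."""
    out: Image = []
    for row in img:
        wide = [p for p in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def zoom_into_region(img: Image, top: int, left: int, height: int, width: int, factor: int = 2) -> Image:
    """'Zoom in' on part of an image: crop the region, then enlarge it."""
    return zoom(crop(img, top, left, height, width), factor)
```

For instance, `rotate_90_clockwise([[1, 2], [3, 4]])` turns the 2x2 grid into `[[3, 1], [4, 2]]`. A model reasoning about a sideways whiteboard photo would chain steps like these (rotate, then crop and enlarge the relevant area) before reading its contents.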

These models achieve best-in-class accuracy on visual perception tasks, letting them solve problems that were previously out of reach.

Limitations

Currently, thinking with images has the following limitations:

- Excessively long reasoning chains: Models may perform redundant or unnecessary tool calls and image manipulation steps, resulting in overly long chains of thought.

- Perception errors: Models can still make basic perception mistakes. Even when tool calls are correct and advance the reasoning process, visual misinterpretations can lead to incorrect final answers.

- Reliability: Models may try different visual reasoning approaches across multiple attempts at the same problem, and some of these approaches can lead to incorrect results.

Toward agentic tool use

OpenAI o3 and o4-mini have full access to the tools within ChatGPT, as well as to your own custom tools via function calling in the API. These models are trained to reason about how to solve problems, choosing when and how to use tools to produce detailed, thoughtful answers in the right output formats, typically in under a minute.
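As a minimal sketch of the function-calling side, assuming the standard Chat Completions `tools` format, here is how a custom tool could be offered to o4-mini and how a tool call from the model could be executed locally. The tool `get_order_status`, its schema, and its stub implementation are hypothetical.

```python
import json

# Hypothetical custom tool: the name, description and schema are made up for illustration.
ORDER_STATUS_TOOL = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of a customer order by its ID.",
        "parameters": {  # JSON Schema for the arguments the model may pass
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "The order identifier."},
            },
            "required": ["order_id"],
        },
    },
}


def build_chat_request(question: str, model: str = "o4-mini") -> dict:
    """Build a Chat Completions request body that offers the custom tool to the model."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "tools": [ORDER_STATUS_TOOL],
        "tool_choice": "auto",  # let the model decide whether and when to call the tool
    }


def get_order_status(order_id: str) -> str:
    # Stub implementation; a real tool would query your own order system.
    return json.dumps({"order_id": order_id, "status": "shipped"})


def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call from the model's reply and wrap the result as a `tool` message."""
    name = tool_call["function"]["name"]
    if name != "get_order_status":
        raise ValueError(f"unknown tool: {name}")
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": get_order_status(**args)}


if __name__ == "__main__":
    print(json.dumps(build_chat_request("Where is order A1234?"), indent=2))
```

The `tool` message returned by `run_tool_call` would be appended to the conversation (after the assistant message that requested the call) so that the model can use the result in its next turn.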

For example, to answer a question about future energy usage, the model can search the web for public utility data, write Python code to build a forecast, generate a graph or image, and explain the key factors behind the prediction, chaining several tool calls together. Because the models reason as they go, they can react to new information and change course when needed: they can run multiple web searches, examine the results, and try new searches if they need more information.
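The chaining and course-correction described above can be pictured as a simple agent loop. This is a hypothetical sketch, not how ChatGPT works internally: `scripted_model` stands in for a reasoning model, and `search` is a fake tool backed by a tiny in-memory index with placeholder text.

```python
from typing import Callable, Dict, List, Tuple

Step = Dict[str, str]  # either {"tool": ..., "input": ...} or {"answer": ...}
History = List[Tuple[str, str]]


def search(query: str) -> str:
    """Hypothetical search tool backed by a tiny in-memory index."""
    index = {"public utility data 2024": "placeholder report text"}
    return index.get(query, "NO RESULTS")


TOOLS: Dict[str, Callable[[str], str]] = {"search": search}


def scripted_model(history: History) -> Step:
    """Stand-in for a reasoning model: picks the next action from what it has observed so far."""
    observations = [text for kind, text in history if kind == "observation"]
    if not observations:
        return {"tool": "search", "input": "utility data"}  # first attempt: a vague query
    if observations[-1] == "NO RESULTS":
        return {"tool": "search", "input": "public utility data 2024"}  # change course
    return {"answer": f"Based on search results: {observations[-1]}"}


def agent_loop(model: Callable[[History], Step], question: str, max_steps: int = 5) -> str:
    """Alternate between model decisions and tool calls until the model answers."""
    history: History = [("question", question)]
    for _ in range(max_steps):
        step = model(history)
        if "answer" in step:
            return step["answer"]
        result = TOOLS[step["tool"]](step["input"])
        history.append(("call", f"{step['tool']}({step['input']!r})"))
        history.append(("observation", result))
    return "gave up after max_steps"


print(agent_loop(scripted_model, "How will energy usage change this summer?"))
```

The first search comes back empty, so the scripted model retries with a more specific query before answering. The `max_steps` cap is a design choice to keep a misbehaving model from looping forever.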

With this flexible, strategic approach, the models can tackle tasks that require up-to-date information beyond their built-in knowledge, extended reasoning, synthesis, and output generation across modalities.

OpenAI o3 and o4-mini are the most intelligent models OpenAI has released, and they are often more efficient than their predecessors, OpenAI o1 and o3-mini. For example, on the 2025 AIME maths competition, the cost-performance frontier for o3 strictly improves over o1, and the frontier for o4-mini strictly improves over o3-mini. More generally, OpenAI expects that for most real-world uses, o3 and o4-mini will be both smarter and cheaper than o1 and o3-mini, respectively.
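To make "the frontier strictly improves" concrete, here is a simplified sketch that treats each model as a handful of measured (cost, score) points, for example one per reasoning-effort setting. The check below is an assumption-laden approximation of the idea, and the points in the usage line are made up, not benchmark results.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (cost, score): lower cost and higher score are better


def dominates(a: Point, b: Point) -> bool:
    """True if `a` is at least as cheap and at least as good as `b`, and strictly better in one."""
    return a[0] <= b[0] and a[1] >= b[1] and (a[0] < b[0] or a[1] > b[1])


def frontier_strictly_improves(new: List[Point], old: List[Point]) -> bool:
    """Finite-sample check: every measured point of the old model is dominated by some new point."""
    return all(any(dominates(n, o) for n in new) for o in old)


# Made-up illustrative points, not real measurements.
print(frontier_strictly_improves(new=[(0.8, 55.0), (1.5, 65.0)], old=[(1.0, 50.0), (2.0, 60.0)]))
```

A true frontier comparison would interpolate between points; checking dominance at the sampled settings is the simplest version of the idea.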

Safety

Every increase in model capability calls for a matching increase in safety. For OpenAI o3 and o4-mini, OpenAI rebuilt its safety training data, adding new refusal prompts in areas such as biological threats (biorisk), malware generation, and jailbreaks. With this refreshed data, o3 and o4-mini have performed strongly on internal refusal benchmarks (such as instruction hierarchy and jailbreaks).

Beyond strong refusal performance, OpenAI has also developed system-level mitigations that flag dangerous prompts in frontier risk areas. Similar to its earlier work on image generation, it trained a reasoning LLM monitor that works from human-written, interpretable safety specifications. When applied to biorisk, this monitor flagged roughly 99 percent of conversations in human red-teaming exercises.

OpenAI stress-tested both models with its most rigorous safety program to date. It evaluated o3 and o4-mini across the three tracked capability areas covered by its updated Preparedness Framework: biological and chemical, cybersecurity, and AI self-improvement. Based on these evaluations, it concluded that o3 and o4-mini remain below the Framework's “High” threshold in all three areas. Detailed results are available in the accompanying system card.

Codex CLI: frontier reasoning in the terminal

OpenAI is also sharing a new experiment: Codex CLI, a lightweight coding agent you can run from your terminal. It works directly on your computer and is designed to make the most of the reasoning capabilities of models like o3 and o4-mini. Support for additional API models, such as GPT‑4.1, is coming soon.

You can take advantage of multimodal reasoning from the command line by passing screenshots or low-fidelity sketches to the model, combined with access to your code locally. OpenAI regards it as a minimal interface for connecting its models to users and their computers.
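Codex CLI handles this itself, and its internals are not described here. To show what sending a sketch together with code looks like at the API level, here is a minimal sketch that builds a Chat Completions request with the image embedded as a base64 data URL. The function name, the default model, and the prompt wording are assumptions for illustration.

```python
import base64


def build_multimodal_request(image_bytes: bytes, code: str, question: str,
                             model: str = "o4-mini", mime: str = "image/png") -> dict:
    """Pack a sketch image and a code snippet into one Chat Completions user message."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "text", "text": "Relevant code:\n" + code},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }


if __name__ == "__main__":
    # In practice you would read a real file, e.g. open("sketch.png", "rb").read().
    fake_png = b"\x89PNG\r\n\x1a\n"  # only the PNG signature, enough to show the encoding
    body = build_multimodal_request(fake_png, "def render(): ...", "Make render() match this wireframe.")
    print(body["messages"][0]["content"][2]["image_url"]["url"][:40])
```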

OpenAI is also launching a $1 million initiative to support projects that use Codex CLI and OpenAI models. Grant applications will be reviewed and accepted for grants in increments of $25,000 USD in the form of API credits.

Access

Starting today, ChatGPT Plus, Pro, and Team users will see o3, o4-mini, and o4-mini-high in the model selector, replacing o1, o3‑mini, and o3‑mini‑high. ChatGPT Enterprise and Edu users will gain access within one week. Free users can try o4-mini by selecting ‘Think’ in the composer before submitting their query. Rate limits across all plans remain the same as for the previous set of models.

OpenAI expects to release OpenAI o3-pro with full tool support in a few weeks. For now, Pro users can still access o1-pro.

Both o3 and o4-mini are also available to developers today through the Chat Completions API and the Responses API, although some developers will need to verify their organisations to access these models. The Responses API will soon support built-in tools such as web search, file search, and code interpreter within the model's reasoning.
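For orientation, here is a minimal sketch of the same request expressed for each API, sent with only the Python standard library. It assumes an `OPENAI_API_KEY` environment variable and the public `https://api.openai.com/v1` endpoints; the helper names are hypothetical, and the official SDKs are the more usual route.

```python
import json
import os
import urllib.request

API_BASE = "https://api.openai.com/v1"


def chat_completions_body(prompt: str, model: str = "o3") -> dict:
    # Chat Completions: the conversation is a list of role/content messages.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": "medium",  # o-series models accept low / medium / high
    }


def responses_body(prompt: str, model: str = "o3") -> dict:
    # Responses API: `input` can be a plain string; reasoning options live under "reasoning".
    return {"model": model, "input": prompt, "reasoning": {"effort": "medium"}}


def post(endpoint: str, body: dict) -> dict:
    """POST a JSON body to the API and return the parsed JSON response.

    Requires OPENAI_API_KEY; access to these models may also require a verified organisation.
    """
    req = urllib.request.Request(
        f"{API_BASE}/{endpoint}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Usage would look like `post("chat/completions", chat_completions_body("..."))` or `post("responses", responses_body("..."))`. The two bodies differ mainly in how the conversation and the reasoning options are expressed.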

The Responses API also supports reasoning summaries and can preserve reasoning tokens around function calls for better performance. See the documentation to get started, and check back for further updates.
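As a minimal sketch of both features in the Responses API, the first request below asks for a reasoning summary, and the follow-up passes the previous response's `output` items (including its reasoning item) back in, together with the function result, so that the model's reasoning is kept next to its function call. The tool, the call ID, and the simplified output items are hypothetical stand-ins; real response items carry additional fields.

```python
import json
from typing import Dict, List

# Hypothetical tool in the Responses API's flat function-tool format.
TOOLS = [{
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the shipping status of an order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}]


def first_request(prompt: str) -> dict:
    return {
        "model": "o4-mini",
        "input": [{"role": "user", "content": prompt}],
        "tools": TOOLS,
        "reasoning": {"effort": "medium", "summary": "auto"},  # request a reasoning summary
    }


def follow_up_request(previous: dict, output_items: List[dict], tool_results: Dict[str, dict]) -> dict:
    """Build the next request after the model asked for function calls.

    Passing the previous `output` items back unchanged keeps the reasoning items next to
    the function calls they led to, so the model can continue from its earlier reasoning.
    """
    new_input = list(previous["input"]) + list(output_items)
    for item in output_items:
        if item.get("type") == "function_call":
            new_input.append({
                "type": "function_call_output",
                "call_id": item["call_id"],
                "output": json.dumps(tool_results[item["call_id"]]),
            })
    return {**previous, "input": new_input}


req1 = first_request("Where is order A1234?")
# Simplified, hypothetical stand-in for the previous response's `output` list.
prior_output = [
    {"type": "reasoning", "id": "rs_1", "summary": []},
    {"type": "function_call", "call_id": "call_1", "name": "get_order_status",
     "arguments": "{\"order_id\": \"A1234\"}"},
]
req2 = follow_up_request(req1, prior_output, {"call_1": {"status": "shipped"}})
```

An alternative is to send only the function output and reference the earlier response by its ID; the explicit version above makes it clearer what the model sees.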

OpenAI o3 and o4-mini are now available in Microsoft Azure OpenAI Service

Microsoft has announced that o3 and o4-mini, the latest models in the o-series, are now available in Microsoft Azure OpenAI Service in Azure AI Foundry, as well as in GitHub.

What’s next

Today's releases reflect the direction OpenAI's models are taking: it is converging the specialised reasoning capabilities of the o-series with more of the natural conversational abilities and tool use of the GPT-series. By combining these strengths, its future models will support seamless, natural conversations alongside proactive tool use and advanced problem-solving.